Implementation of Logistic Regression

As described in the overview of logistic regression, the goal of this statistical tool is to parameterize a non-linear relationship between one or more independent variables and the probability that a binary variable will be coded as 1 rather than 0 (e.g., the data value is yes rather than no, or diseased rather than without disease). This statistical tool uses the logit as its link function; the logit link constrains the predicted probabilities (predictions of y) to the range from 0 to 1. Some software packages offer variants of logistic regression that can be applied to nominal response variables with more than two categories, but these advanced methods are not currently implemented in SpaceStat.
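As a minimal sketch of how the logit link keeps predictions between 0 and 1, the following Python functions convert between a probability and its log odds. The function names `logit` and `inverse_logit` are illustrative and are not part of SpaceStat.

```python
import math

def logit(p):
    """Log odds of a probability p, defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))

def inverse_logit(eta):
    """Map any real-valued linear predictor eta back to a probability."""
    # Two algebraically equivalent branches avoid overflow in exp().
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)

# However extreme the linear predictor, the result stays within [0, 1].
for eta in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    prob = inverse_logit(eta)
    assert 0.0 <= prob <= 1.0
```

A linear predictor of 0 corresponds to a probability of 0.5, and positive or negative values move the probability toward 1 or 0 respectively.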

Obtaining the parameter estimates

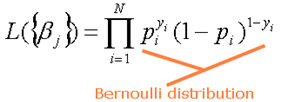

For logistic regression in SpaceStat, the regression formulation is carried out in terms of maximum likelihood (L) estimation. A "likelihood" is a probability (and must have a value within the range of 0 to 1); in this case, it is the probability of obtaining the observed values of the dependent variable given the independent variables and a particular set of regression coefficients. As indicated in the equation below, the maximum likelihood estimator uses a Bernoulli distribution to define a joint probability distribution from the individual dependent variable observations. In the following equations, the brackets around the beta, which symbolizes the regression coefficients, indicate that we are estimating two or more regression coefficients.

The goal of maximum likelihood estimation is to maximize the log-likelihood (lnL), which ranges from negative infinity to 0 (it is never positive, because it is the log of a value that cannot exceed 1). Maximum likelihood estimation is an iterative process. Recall also from our overview that we are treating aspatial regression as the "global" version of geographically-weighted regression; as a result, we need to account for weighting factors (w) in our estimation. The weighted log-likelihood for logistic regression can be obtained by raising each individual probability to the power of its weight factor, taking the product over observations, and then taking the logarithm.
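The weighted log-likelihood described above can be sketched in a few lines of Python. The function name and the data values are made up for illustration; with all weights equal to 1, the calculation reduces to the ordinary (aspatial) log-likelihood.

```python
import math

def weighted_log_likelihood(y, p, w):
    """lnL = sum_i w_i * [y_i * ln(p_i) + (1 - y_i) * ln(1 - p_i)]."""
    total = 0.0
    for yi, pi, wi in zip(y, p, w):
        total += wi * (yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi))
    return total

y = [1, 0, 1, 1]          # observed binary outcomes (made-up data)
p = [0.8, 0.3, 0.6, 0.9]  # predicted probabilities of a 1 (made-up data)
w = [1.0, 1.0, 1.0, 1.0]  # unit weights give the "global" case

lnL = weighted_log_likelihood(y, p, w)

# Same quantity by the route given in the text: raise each Bernoulli
# probability to the power w_i, take the product, then take the log.
product = 1.0
for yi, pi, wi in zip(y, p, w):
    product *= (pi ** yi * (1.0 - pi) ** (1 - yi)) ** wi
assert abs(lnL - math.log(product)) < 1e-12
```

Summing weighted logs is preferred in practice, because a product of many probabilities quickly underflows to zero.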

To estimate the regression coefficients, SpaceStat uses the Taylor expansion of the equation above, and then the maximum likelihood algorithm determines the direction and sign of changes in the regression coefficients which will increase the lnL. After starting from an arbitrary set of coefficient estimates, the initial function is estimated and the residuals are evaluated. From these results, the algorithm modifies the coefficient values, and generates a new set of residuals which are compared to previous values. This process continues until there is little change in the lnL. There is a possibility that this process will not lead to convergence due to what is called a "ridge-effect"; in this case, the Log-likelihood remains constant as coefficients are varied.
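The iterative process can be illustrated with a Newton-Raphson update, which is based on a second-order Taylor expansion of lnL. This is a sketch under stated assumptions, not SpaceStat's actual code: the source does not give the exact update rule, the function name `fit_logistic` is hypothetical, the model has a single independent variable, and the data are made up.

```python
import math

def fit_logistic(x, y, w=None, tol=1e-8, max_iter=50):
    """Fit P(y=1) = 1 / (1 + exp(-(b0 + b1*x))) by Newton-Raphson."""
    n = len(x)
    w = w or [1.0] * n
    b0, b1 = 0.0, 0.0            # arbitrary starting coefficients
    previous_lnL = -math.inf
    for iteration in range(1, max_iter + 1):
        p = [1.0 / (1.0 + math.exp(-(b0 + b1 * xi))) for xi in x]
        lnL = sum(wi * (yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi))
                  for yi, pi, wi in zip(y, p, w))
        # Stop when lnL has essentially stopped changing.
        if abs(lnL - previous_lnL) < tol:
            return b0, b1, lnL, iteration
        previous_lnL = lnL
        # Gradient of lnL: weighted residuals (y - p) times each regressor.
        g0 = sum(wi * (yi - pi) for yi, pi, wi in zip(y, p, w))
        g1 = sum(wi * (yi - pi) * xi for xi, yi, pi, wi in zip(x, y, p, w))
        # Information matrix (negative Hessian of lnL), here 2 x 2.
        h00 = sum(wi * pi * (1 - pi) for pi, wi in zip(p, w))
        h01 = sum(wi * pi * (1 - pi) * xi for xi, pi, wi in zip(x, p, w))
        h11 = sum(wi * pi * (1 - pi) * xi * xi for xi, pi, wi in zip(x, p, w))
        det = h00 * h11 - h01 * h01
        # Newton step: solve (information matrix) * step = gradient.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    raise RuntimeError("no convergence within max_iter iterations")

x = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]   # made-up predictor values
y = [0, 0, 1, 0, 1, 0, 1, 1]                   # made-up binary outcomes
b0, b1, lnL, iterations = fit_logistic(x, y)
```

The loop stops when the change in lnL falls below `tol`, mirroring the "little change in the lnL" criterion; the `RuntimeError` stands in for the non-convergence case mentioned above.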

Evaluating the model

Overall model quality is assessed for logistic regression models using negative 2 times the natural log of the likelihood function (-2lnL); in general, as the model fit improves, -2lnL will decrease in magnitude. Other names for -2lnL include the likelihood ratio, deviance chi-square, and the model's measure of goodness of fit. Values of -2lnL have approximately a chi-square distribution, and as a result this distribution is used for significance testing of the overall model. In effect -2lnL plays the same role as the residual sum of squares (RSS) in linear regression (i.e., it reflects the unexplained variance between predicted and observed values). The -2lnL value forms the basis of the likelihood ratio test (described below), which as the name suggests, is a significance test based on the difference between the likelihood ratios of two forms of a model.

Measures of model fit

For logistic regression, SpaceStat presents two calculations that play the role of R-squared in linear regression. Thus, these values describe the strength of a particular suite of independent variables and coefficients as predictors of the log odds of obtaining a 1 at a particular value(s) of the independent variables. The first of these is the "Cox and Snell R-Squared", shown below. As the -2lnL value for the "Full" model decreases relative to that of the "Intercept" (intercept-only) model, the Cox and Snell R-squared result moves closer to one. Since the result can never actually reach one, the "Nagelkerke R-Squared" is also shown; this descriptor takes the Cox and Snell value and divides it by the value it would have with the full model -2lnL set to zero, which is its maximum possible value.
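The two measures can be sketched as follows, assuming the standard textbook definitions expressed in terms of -2lnL (the source's own equations are not reproduced here, so treat the formulas as an assumption). The function names and the input values are made up for illustration.

```python
import math

def cox_snell_r2(dev_intercept, dev_full, n):
    """1 - exp(-(D_intercept - D_full) / n), where D = -2lnL and n = sample size."""
    return 1.0 - math.exp((dev_full - dev_intercept) / n)

def nagelkerke_r2(dev_intercept, dev_full, n):
    """Cox and Snell value rescaled by its maximum (full-model -2lnL set to 0)."""
    max_r2 = cox_snell_r2(dev_intercept, 0.0, n)
    return cox_snell_r2(dev_intercept, dev_full, n) / max_r2

# Made-up values: 100 observations, -2lnL of 138.6 (intercept only) and 100.0 (full).
cs = cox_snell_r2(138.6, 100.0, 100)
nk = nagelkerke_r2(138.6, 100.0, 100)
assert 0.0 < cs < nk < 1.0
```

Because the divisor is always less than one, the Nagelkerke value is always at least as large as the Cox and Snell value, and it equals one for a model with -2lnL of zero.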

Significance of the full model: the model chi-square test

For logistic regression, a comparison is made between the value of -2lnL (likelihood ratio) of the full model and the likelihood ratio for a "worst model", defined as the model that fits the probability using a single constant value (the intercept-only model). The significance of the difference between these two values of -2lnL is then evaluated using a chi-square distribution to obtain a p-value; the degrees of freedom equal the number of parameters in the full model minus 1 for the constant (i.e., the number of independent variables). The p-value that is returned for this test can then be compared to your pre-set alpha value (typically 0.05). A p-value that is smaller than your alpha indicates that the model you created is significantly better at predicting the log odds of obtaining "1" as your predicted value than a model based on just a constant value. Another way to think of this test is as a test of the null hypothesis that all of the regression coefficients in your model except for the constant equal zero.
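A minimal sketch of the model chi-square test follows. The helper `chi2_sf` is a hand-written upper-tail probability for integer degrees of freedom (a statistics library would normally supply this), and `model_chi_square_test` is a hypothetical name; the -2lnL values are made up.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variate, integer df >= 1."""
    if x <= 0:
        return 1.0
    chi = math.sqrt(x)
    z = math.exp(-x / 2.0) / math.sqrt(2.0 * math.pi)  # standard normal density at chi
    if df % 2 == 1:
        # Odd df: two-sided normal tail plus a finite correction series.
        series, term = 0.0, chi
        for r in range(1, (df - 1) // 2 + 1):
            series += term
            term *= x / (2 * r + 1)
        return math.erfc(chi / math.sqrt(2.0)) + 2.0 * z * series
    # Even df: exp(-x/2) times a finite series in x/2.
    series, term = 1.0, 1.0
    for r in range(1, (df - 2) // 2 + 1):
        term *= x / (2 * r)
        series += term
    return math.sqrt(2.0 * math.pi) * z * series

def model_chi_square_test(dev_intercept, dev_full, n_predictors):
    """Return the chi-square statistic and p-value for the full model."""
    statistic = dev_intercept - dev_full   # drop in -2lnL from adding predictors
    return statistic, chi2_sf(statistic, n_predictors)

# Made-up values: -2lnL of 138.6 (intercept only) and 100.0 (3 independent variables).
statistic, p_value = model_chi_square_test(138.6, 100.0, 3)
alpha = 0.05
full_model_is_significant = p_value < alpha
```

With these made-up inputs the drop in -2lnL is large relative to 3 degrees of freedom, so the null hypothesis that all non-constant coefficients equal zero would be rejected.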

Significance of individual terms in the regression model

For logistic regression, SpaceStat presents the parameter estimates, parameter standard errors and p-values (using a chi-square distribution). As described above for evaluating the significance of the entire logistic regression model, likelihood ratio tests are used to evaluate the significance of individual parameters in the model. The basic idea of these significance tests is the same as the test of significance of the full model, except in this case the test is based on the difference in -2lnL between the overall model and a nested model where one independent variable has been dropped. If the test for a particular parameter is not significant, this means that the coefficient for that variable can be considered not significantly different from zero, and that you can drop this variable from your model without a significant reduction in model fit. Note that you can't use this approach to compare two non-nested models.
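For a single dropped variable the test has one degree of freedom, in which case the chi-square p-value can be written with the complementary error function. The sketch below uses a hypothetical function name and made-up -2lnL values.

```python
import math

def single_term_lr_test(dev_reduced, dev_full):
    """Likelihood ratio test for one dropped variable (1 degree of freedom)."""
    statistic = dev_reduced - dev_full     # increase in -2lnL when the term is dropped
    # For 1 df, P(chi-square > s) = erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(max(statistic, 0.0) / 2.0))
    return statistic, p_value

# Made-up values: dropping one variable raises -2lnL from 100.0 to 101.2.
statistic, p_value = single_term_lr_test(101.2, 100.0)
alpha = 0.05
term_is_significant = p_value < alpha
```

Here the small change in -2lnL gives a p-value above 0.05, so this made-up variable would be a candidate for removal.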